It is sometimes useful for a hypervisor to access data served by one of its own virtualized guests. In this example, Proxmox accesses NFS shares on a TrueNAS virtual machine. TrueNAS could just as easily be replaced with OpenMediaVault or any other NAS server; the concept of recursive storage access remains the same. NFS could likewise be replaced with iSCSI, or even SMB.

NOTE: Running TrueNAS (or another storage system) virtualized rather than on bare-metal hardware is not universally a good idea. You need a specific hardware and software setup for it to work properly; otherwise you may encounter instability or, in some cases, catastrophic data loss. PCI passthrough of your HBA storage controller and/or drives is required. Motherboards vary in how well they support this, so do your due diligence before attempting it!

In this install, the requirements were a single hypervisor server with a NAS VM guest that controls a ZFS drive pool directly and serves several data shares over NFS to the Proxmox hypervisor. The NFS shares are used for VM install images (ISO and boot files), root filesystem storage for virtual machines and LXC containers, and container application data storage. All VMs and containers auto-start when Proxmox boots.

Installing Proxmox is very straightforward, so I won't cover it here, nor will I cover creating the NAS VM and installing its software (TrueNAS, etc.).

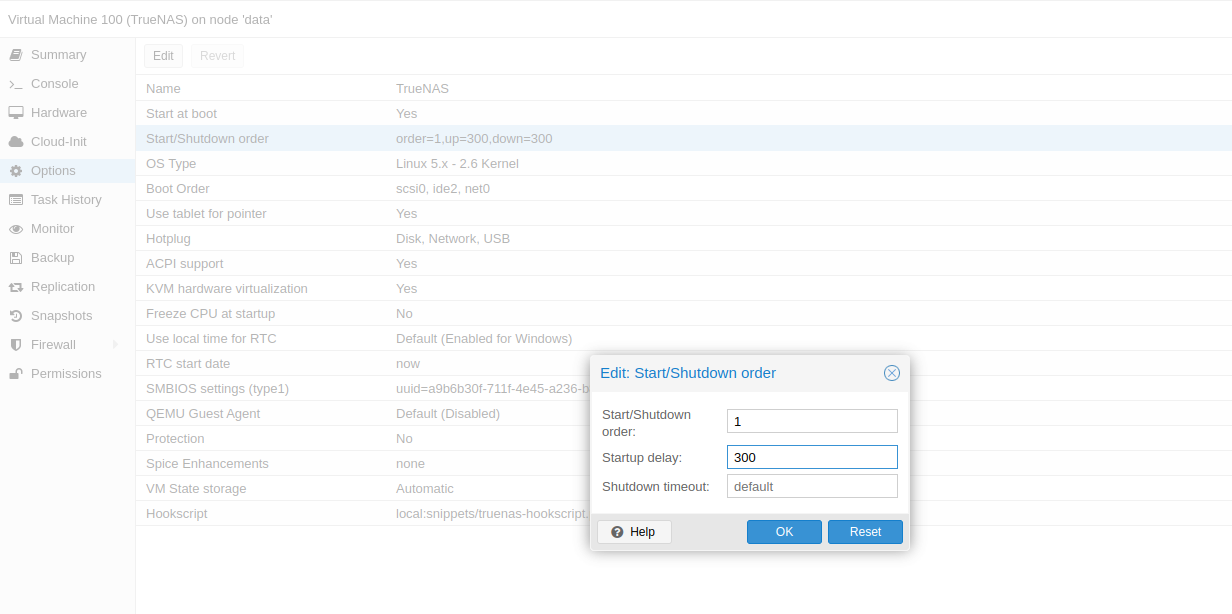

The main hurdle to overcome with this setup is making sure the NFS shares are available when needed. This is solved fairly simply by specifying the VM startup order of the NAS VM to be first, and adding a boot delay before starting any other VMs or containers. You can implement this easily using the Proxmox web interface:

Or using the console (assuming the NAS VM ID is 100; up=300 is the delay in seconds before the next guest is started):

qm set 100 --onboot 1 --startup order=1,up=300
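
The same options can be applied to the dependent guests. As far as I know, guests without an explicit order start after the ordered ones anyway, so this step is optional; the sketch below only makes the ordering explicit. The helper name `set_dependent_order` is made up for illustration, and `pct` is assumed for LXC containers in place of `qm`.

```shell
#!/bin/sh
# Hypothetical helper: mark guests to start at boot with a later
# startup order than the NAS VM (which uses order=1 above).
# Usage: set_dependent_order <tool> <order> <id>...
#   <tool> is "qm" for VMs or "pct" for LXC containers.
set_dependent_order() {
    tool=$1
    order=$2
    shift 2
    for id in "$@"; do
        "$tool" set "$id" --onboot 1 --startup "order=$order"
    done
}
```

For example, `set_dependent_order qm 2 101 102` would apply order 2 to two VMs with the (hypothetical) IDs 101 and 102.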

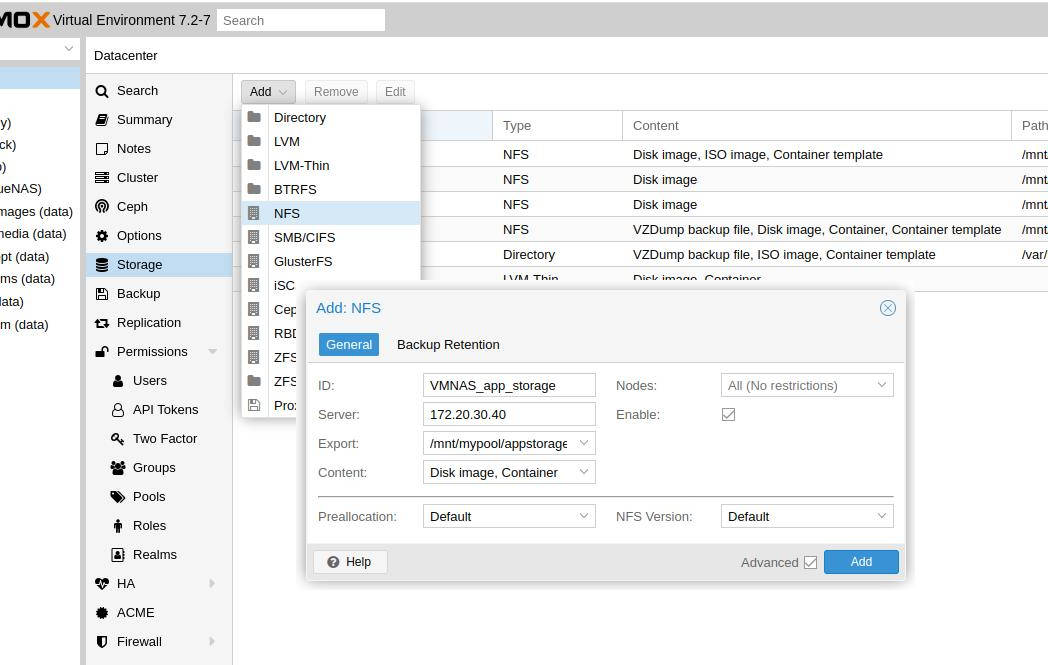

Once the NAS VM is running, add your NFS shares (or iSCSI or SMB shares) as storage locations in Proxmox, just as you would if they were hosted externally. I make a point of beginning the storage IDs (mount points) with an identifying prefix, so they can be distinguished from storage that is not hosted on a virtualized guest, e.g. VMNAS_storage1, VMNAS_iso_images, VMNAS_app_storage, etc. Add your NFS mounts using the Proxmox web interface:

Or via the console:

pvesm add nfs VMNAS_iso_images --server 172.20.30.40 \
    --path /mnt/pve/VMNAS_iso_images --export /mnt/mypool/isos \
    --content iso

pvesm add nfs VMNAS_app_storage --server 172.20.30.40 \
    --path /mnt/pve/VMNAS_app_storage --export /mnt/mypool/appstorage \
    --content images,rootdir
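
If you add several shares, the repeated parts of the command can be wrapped in a small function. This is only a sketch: `add_nas_share` and the `NAS_IP` variable are names I made up, and the default address is the example one used above.

```shell
#!/bin/sh
# Hypothetical wrapper around the pvesm call shown above, so the VMNAS_
# prefix and the server address are written only once.
# NAS_IP defaults to the example address used in this article.
add_nas_share() {
    name=$1         # storage ID suffix, e.g. iso_images
    export_path=$2  # export path on the NAS, e.g. /mnt/mypool/isos
    content=$3      # Proxmox content types, e.g. iso or images,rootdir
    pvesm add nfs "VMNAS_$name" --server "${NAS_IP:-172.20.30.40}" \
        --path "/mnt/pve/VMNAS_$name" --export "$export_path" \
        --content "$content"
}
```

Calling `add_nas_share iso_images /mnt/mypool/isos iso` should then be equivalent to the first command above.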

Once your NFS storages are added, Proxmox should automatically mount them when they become available after the NAS VM boots up. The startup delay will allow time for the NAS to boot and make the NFS shares available before Proxmox attempts to start the other VMs and containers.
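
To find out how long the delay really needs to be on your hardware, you can poll a mount point after boot and see when it appears. A minimal sketch, assuming the `mountpoint` tool from util-linux is available on the host; the function name and the `IS_MOUNTED` override are hypothetical.

```shell
#!/bin/sh
# Poll until a path is an active mount point, or give up after a timeout.
# Usage: wait_for_mount <path> [timeout_seconds] [poll_interval_seconds]
# IS_MOUNTED is a hypothetical override for the check command; by default
# the util-linux "mountpoint -q" is used.
wait_for_mount() {
    target=$1
    timeout=${2:-300}
    interval=${3:-5}
    waited=0
    # IS_MOUNTED is left unquoted on purpose so "mountpoint -q" splits
    # into a command and its option.
    while ! ${IS_MOUNTED:-mountpoint -q} "$target"; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    return 0
}
```

For example, `wait_for_mount /mnt/pve/VMNAS_iso_images 300 5 && echo "share is up"` checks every 5 seconds for up to 300 seconds.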

At this point the system is mostly set up, and Proxmox can happily access the NFS shares provided by the NAS guest. However, there is a problem. If the Proxmox host is shut down without first manually stopping all the VMs and containers that use the storage and then unmounting the NFS shares, Proxmox will shut down all the dependent VMs and finally the NAS VM, while the NFS shares are still mounted on the host. The shutdown then hangs: by the time Proxmox tries to unmount the NFS shares (late in the shutdown process), the virtualized NAS guest has already (correctly) been shut down and is completely inaccessible to Proxmox.

To deal with this, I use Proxmox hookscripts. A hookscript allows Proxmox to perform certain actions at various points of a VM lifecycle (pre-start, post-start, pre-stop, and post-stop). There is an example Perl hookscript provided by Proxmox which we can copy to the default Proxmox snippets directory under a new filename:

cp /usr/share/pve-docs/examples/guest-example-hookscript.pl /var/lib/vz/snippets/mynas-hookscript.pl

Edit the hookscript, and in the pre-stop section, add some code to unmount the NFS storage locations before the NAS VM is shut down. You can manually specify each mountpoint:

system("umount /mnt/pve/remote_storage_nfs");

system("umount /mnt/pve/VMNAS_iso_images");

Or if you follow my recommendation to prefix each NFS mount with something like VMNAS_, you can get a bit fancy so that you won't have to modify your hookscript if you add other NFS mounts from the VM guest later:

# Collect every mount point that carries the VMNAS_ prefix.
my @mountpoints = glob('/mnt/pve/VMNAS_*');
foreach my $mp (@mountpoints) {
    print "Unmounting NAS NFS share: $mp\n";
    system('umount', $mp);
}
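
As far as I know, Proxmox simply executes the hookscript with the guest ID and the phase name as its two arguments, so the same logic could also be written as a plain shell script instead of editing the Perl example. The following is a sketch under that assumption, not a drop-in replacement; `MOUNT_ROOT` is a hypothetical override that defaults to /mnt/pve, and the function names are my own.

```shell
#!/bin/sh
# Sketch of a shell hookscript, assumed to be called as: <script> <vmid> <phase>
# MOUNT_ROOT is a hypothetical override; the VMNAS_ prefix follows the
# naming recommendation from this article.

unmount_nas_shares() {
    root=${MOUNT_ROOT:-/mnt/pve}
    for mp in "$root"/VMNAS_*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -d "$mp" ] || continue
        echo "Unmounting NAS NFS share: $mp"
        umount "$mp" || echo "Warning: could not unmount $mp" >&2
    done
}

main() {
    vmid=${1:-}
    phase=${2:-}
    case "$phase" in
        pre-stop) unmount_nas_shares ;;
        *) : ;;  # pre-start, post-start, post-stop: nothing to do
    esac
}

main "$@"
```

The script only acts in the pre-stop phase and ignores every other phase, so attaching it to the NAS VM should not affect startup.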

Finally, tell Proxmox to run the hookscript when starting/stopping the NAS VM guest (at present this is only possible using the command line, not the web interface):

qm set 100 -hookscript local:snippets/mynas-hookscript.pl
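
As far as I know, Proxmox refuses to use a hookscript that is not executable, so it may be worth setting the executable bit before attaching the script. A small sketch; `attach_hookscript` and the `SNIPPET_DIR` override are hypothetical names, and the default directory is the one used in the cp command above.

```shell
#!/bin/sh
# Hypothetical helper: make a snippet executable and attach it to a guest.
# SNIPPET_DIR defaults to the snippets directory of the "local" storage
# used in this article.
attach_hookscript() {
    vmid=$1
    script=$2
    dir=${SNIPPET_DIR:-/var/lib/vz/snippets}
    if [ ! -f "$dir/$script" ]; then
        echo "No such hookscript: $dir/$script" >&2
        return 1
    fi
    chmod +x "$dir/$script"
    qm set "$vmid" --hookscript "local:snippets/$script"
}
```

For the NAS VM in this article that would be `attach_hookscript 100 mynas-hookscript.pl`.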

Whenever Proxmox stops the NAS VM, either manually or during a reboot/poweroff, the hookscript runs and unmounts the NFS shares before the VM is stopped. Note that the hookscript only runs when Proxmox stops the VM, not when the VM is stopped from the inside. In other words, if you run poweroff or reboot inside the NAS VM's console, the hookscript will not run, and the NFS shares will become unresponsive to Proxmox. With that caveat, the setup I've described allows the system as a whole to start up and shut down cleanly, without hangs or issues due to the recursive storage system.

Comments

Another way to find all NFS mounts

If you don't have your mounts nicely named, the findmnt command can be used to list all NFS mounts instead:

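# List the targets of all NFS mounts (both nfs and nfs4 filesystem types).
my @mountpoints = `findmnt -t nfs,nfs4 --df -o TARGET -n`;
chomp(@mountpoints);    # strip the trailing newline from each line
foreach my $mp (@mountpoints) {
    print "Unmounting NAS NFS share: $mp\n";
    system('umount', $mp);
}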
